AI & Energy Storage: Why It Matters for Data Centres

The Evolution of Energy Storage in Critical Infrastructure

Energy storage (ES) has always had a place in data centers, with their stringent uptime, reliability, and maintenance requirements. Historically, it began as supporting infrastructure born out of the telecommunications industry and eventually made its way into other critical data infrastructure. Those old enough to remember analog landlines will recall that the phone still worked even when the power went out. That was thanks to the dc voltage (in the range of -36 to -72 Vdc) supplied directly by the telco provider, which supported a nominal -48 Vdc distribution while accounting for voltage drop (a.k.a. IR drop) and line fluctuations at the input of powered devices. This was what we might have called remote line power (RLP), a concept most recently extended into fault-managed power (FMP, or Class 4 power as defined in the 2023 NFPA 70, a.k.a. the National Electrical Code or NEC). As the world became more reliant on data centers, the uptime and reliability requirements only increased. This necessitated an ever-increasing ES footprint at all levels, from chip to grid.

Understanding the Tiered Model of Energy Storage

To better understand why the ES footprint continually increases, it helps to break down the tiers of ES support in the data center, which are all about timing, proximity, and application. ES solutions traditionally target one of three applications: 1) bulk storage (i.e., holdup); 2) peak shaving or smoothing; and 3) operational expenditure (OpEx) optimization (i.e., running off ES when the cost of power is high and/or selling back to the grid in the case of renewable microgrid charging). The explosive growth of graphics processing unit (GPU)-based data centers targeting AI applications in the past few years has also brought some new applications to the forefront, such as supporting utility curtailment requirements and/or absorbing load (potentially also providing simulated machine inertia) to ensure stable grids. Applying ES to all these use cases also brings the bonus of enabling more sustainable data centers.
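To make the peak-shaving application concrete, here is a minimal sketch of the idea, not a production controller. It assumes an ideal, lossless battery that starts fully charged, a fixed grid import limit, and hourly load samples; the function name `peak_shave` and all numbers are hypothetical and chosen only for illustration.

```python
def peak_shave(load_kw, grid_limit_kw, capacity_kwh, step_h=1.0):
    """Return (grid_kw, soc_kwh) traces for a simple peak-shaving policy.

    Assumptions (illustrative only): lossless battery, no power limit on
    the converter, battery starts full, fixed time step of `step_h` hours.
    """
    soc = capacity_kwh
    grid, soc_trace = [], []
    for load in load_kw:
        if load > grid_limit_kw:
            # Discharge to cover the excess, limited by the stored energy.
            discharge = min(load - grid_limit_kw, soc / step_h)
            soc -= discharge * step_h
            grid.append(load - discharge)
        else:
            # Recharge using the headroom left under the grid limit.
            charge = min(grid_limit_kw - load, (capacity_kwh - soc) / step_h)
            soc += charge * step_h
            grid.append(load + charge)
        soc_trace.append(soc)
    return grid, soc_trace


# Hypothetical hourly load profile in kW.
load = [400, 900, 1200, 600]
grid, soc = peak_shave(load, grid_limit_kw=800, capacity_kwh=500)
print(grid)  # grid draw never exceeds 800 kW in this example
print(soc)   # battery state of charge after each hour, in kWh
```

In this example the grid never sees the 1200 kW peak: the battery covers the excess and then recharges once the load falls back under the limit. A real system would also have to account for converter power limits, round-trip losses, and tariff signals.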

Response Time and Proximity: The Critical Variables

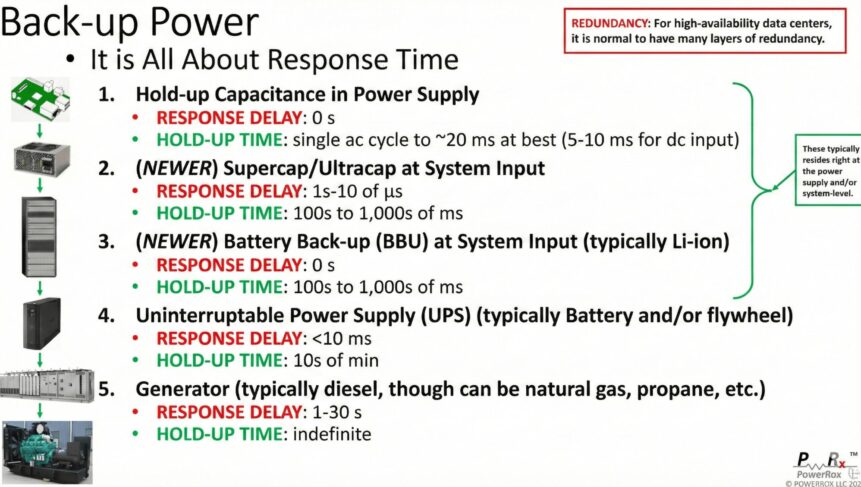

Since ES can come in all shapes and sizes (and chemistries), it is worth taking a moment to understand where and when its value comes into play. This tends to be a tiered model of deployment that boils down to two variables: response time and proximity to the load. There are shorter-term needs that may require higher peak power for short durations, and the ES serving them tends to be physically located closer to the target load. These short-term ES solutions will keep the loads happy and running until longer-term solutions, which tend to be located further out as you go down the hierarchy, can provide more sustained energy. Examples of these tiers, associated classes of ES, and relative timings are summarized in Figure 1 (below).

Fig. 1 – Tiered Energy Storage (ES) in Data Center from System to Grid with Response/Hold-up Timings, courtesy of PowerRox.
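The relationship between hold-up time and stored energy in each tier can be sketched with simple arithmetic: energy is load power multiplied by hold-up time, scaled up for conversion losses and for the fraction of capacity that is actually usable. The snippet below is a rough sizing sketch under those assumptions. The tier names, loads, hold-up times, efficiency, and usable fraction are all hypothetical placeholders and are not taken from Figure 1.

```python
def required_energy_wh(load_w, holdup_s, efficiency=0.95, usable_fraction=0.8):
    """Estimate the nameplate energy (Wh) needed to hold up a load.

    load_w          : load power in watts
    holdup_s        : required hold-up time in seconds
    efficiency      : assumed discharge-path efficiency (0 to 1)
    usable_fraction : assumed share of nameplate capacity that may be used
    """
    delivered_wh = load_w * holdup_s / 3600.0
    return delivered_wh / (efficiency * usable_fraction)


# Hypothetical tiers: (name, load in W, hold-up time in s).
tiers = [
    ("board-level capacitors", 1_000, 0.02),
    ("rack-level battery", 100_000, 60),
    ("facility-level battery", 5_000_000, 900),
]

for name, load_w, holdup_s in tiers:
    energy = required_energy_wh(load_w, holdup_s)
    print(f"{name}: {energy:,.3f} Wh")
```

The point of the exercise is the spread in scale: tiers close to the load need very little energy but must respond almost instantly, while tiers further out trade response time for orders of magnitude more stored energy.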

The AI Data Centre “Space Race” and ROI Implications

The AI data center “space race” has exposed and amplified the need for integrated ES more than ever. With the overwhelming demand for power driven by racks of GPUs, ES plays a key role in delivering the return on investment (ROI) expected from the seemingly unlimited investment currently being poured into these deployments. One can break down AI applications into two primary categories: 1) training large language models (LLMs); and 2) using the models for applications such as agentic AI or content generation.

Why LLM Training Demands Bulletproof Power Infrastructure

Training an LLM is achieved by connecting (physically and virtually) many GPUs to act as one super processor, which is very sensitive to even slight disruptions of processing/network connections, and therefore to power/voltage sags. Even a momentary drop can render a training run useless after an investment of days/weeks/months and GWh of energy. Many GPU-as-a-Service (GPUaaS) business models are built around tightly managed scheduling and uptime guarantees. Couple this with the massive capital expenditure (CapEx) of these deployments and it is easy to see why so much effort is spent ensuring these systems effectively never go down. ES implemented with intelligent power management (IPM) and various levels of redundancy at each tier ensures that AI data centers can achieve their application objectives, and therefore their deployment ROI, to be the cash cows the world is currently so excited about.

Grid Stability and Curtailment Compliance

As touched on above, the risk associated with MW-scale loads that can transition from idle to full load in milliseconds is a direct threat to the stability of the utility grids servicing these loads (along with other constituents on a shared, distributed grid). Jurisdictions hosting these data centers have implemented various laws and regulations regarding curtailment (utility-controlled/initiated load reduction) to help stabilize grids in periods of load transient disturbance and/or to exponentially raise the real-time cost of energy when quotas are exceeded. ES can support these requirements by absorbing these high-transient peaks as well as supplementing bulk power requirements from the grid, thus minimizing grid stress and the potential impact on the neighbors.
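One way to picture ES absorbing these transients is as a ramp-rate limiter between the load and the grid: the grid draw may change by only a bounded amount per time step, and the ES makes up or soaks up the difference. The sketch below illustrates that idea only. The function name `ramp_limit`, the step size, and the numbers are hypothetical, and it assumes an ES with unlimited energy and power, which a real design would have to bound.

```python
def ramp_limit(load_kw, max_ramp_kw_per_step):
    """Split a load profile into a ramp-limited grid draw and an ES share.

    Returns (grid_kw, es_kw). A positive ES value means the ES is
    discharging into the load; a negative value means it is absorbing
    power the grid is still delivering. Assumes an ideal ES with no
    energy or power limits (illustrative only).
    """
    grid = [load_kw[0]]
    es = [0.0]
    for load in load_kw[1:]:
        prev = grid[-1]
        # Clamp the change in grid draw to the allowed ramp per step.
        delta = max(-max_ramp_kw_per_step,
                    min(max_ramp_kw_per_step, load - prev))
        g = prev + delta
        grid.append(g)
        es.append(load - g)
    return grid, es


# Hypothetical GPU cluster stepping from idle to full load and back.
load = [100, 1000, 1000, 1000, 100]
grid, es = ramp_limit(load, max_ramp_kw_per_step=300)
print(grid)  # grid draw changes by at most 300 kW per step
print(es)    # ES covers the step up, then absorbs the step down
```

From the grid's perspective the abrupt load step becomes a gradual ramp, which is the behavior curtailment and ramp-rate rules are generally trying to enforce.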

Sustainability: Green for the Planet, Green for the Pocketbook

The brief mention of sustainability above should also not be glossed over, as being green for the planet can also be increasingly green for the pocketbook. ES enables the world to satisfy that insatiable hunger for AI data center deployments in a way that ekes more performance out of every watt generated, regardless of where/how it is generated. This means making the most out of renewable and less-carbon-friendly energy sources alike. For instance, the GPU clusters that comprise AI data centers must often dump their load for the sake of upstream stability; that energy is dissipated as heat, literally burning away the investment. ES can store this energy and recycle it.

ES is truly the fundamental value-add to AI data centers built on oceans of demanding GPU racks. Converting the hype cycles into real money and net-positive societal impact will only be achieved via bulletproof data centers that can find the growing power they need and deliver it in a sustainable manner.
